Back in October 2014, Microsoft announced that Docker would be supported on Windows Server 2016, and since then a great deal of work has been done to get Docker running both on the server and on Windows 10. With the expectation that Windows Server 2016 is going to hit RTM this month, we thought it a great time to make sure our Docker story was up to scratch.

At the time, Paul Stovell wrote up some initial thoughts on what this integration might look like. Since then, the landscape has changed a lot, especially with the arrival of cross-platform .NET Core and Windows Nano Server.

We've done a bunch of internal spikes and had a lot of discussions about how Docker and Octopus would work best together. To make sure we continue to deliver the best Octopus we can, now seems an opportune time to put our ideas to the community and check that we are on the right track.

Is Octopus still even relevant?

Even though we might be a bit biased, we definitely believe that there is a place for Octopus in the Docker world. While there are other reasons, our main thoughts are:

- Environment progression: Octopus provides a great view of your environments and the lifecycles that your application goes through. This makes it easy to see what version of a container is running in each environment.

- Orchestration: Running a container is the primary thing that happens when you deploy a new version of your containerised app, but there are often database migrations, emails, Slack notifications, manual interventions and more to coordinate around it.

- Configuration: It is rare for an app to have identical configuration between environments. Octopus makes it easy to organise your configuration and scope each value to get the desired outcome.

- Centralised auditing: Octopus centralises your security, auditing and logging concerns for your deployments.

- Non-containerised applications: Finally, while some people might be moving to Docker, there is still a long tail of other systems to deploy, at least some of which will have no valid case to move.

But isn't this just for *nix folk?

As a tool that primarily targets .NET developers (though it works great with other languages too!), we know that there's not a large crossover with the traditional Docker audience. As such, we want to make it super easy to dip your toes in the water and encourage you to do things "The Docker Way", but we also know that a lot of applications are not written with this mindset. We will most likely implement some features that go against Docker principles such as immutable containers, for example by allowing you to modify application configuration (web.config or app.config) as you start a new container instance.

Also, with more people targeting cross-platform .NET Core, making it easy to deploy and test on multiple platforms becomes much more important.

Deploying Docker containers

To align with Docker principles as much as possible, we are looking to support containers as a package (approach 3 from the original blog post). The container image would be built by your build tool and pushed to a container registry such as Docker Hub. This helps ensure that the exact same bits are promoted between environments.

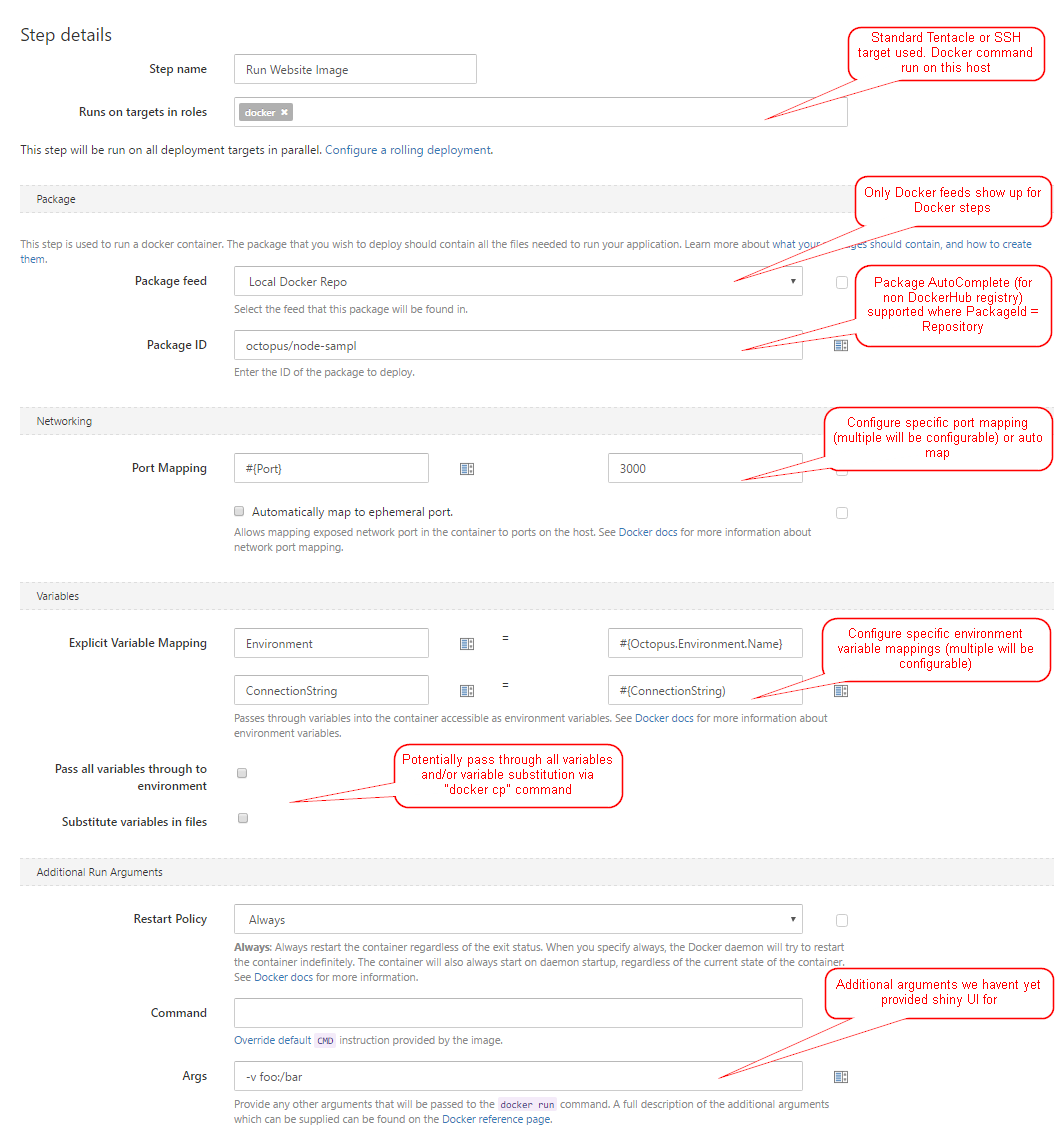

This would mean a new Package source type called Docker Container Repository, allowing access to both authenticated and unauthenticated registries.

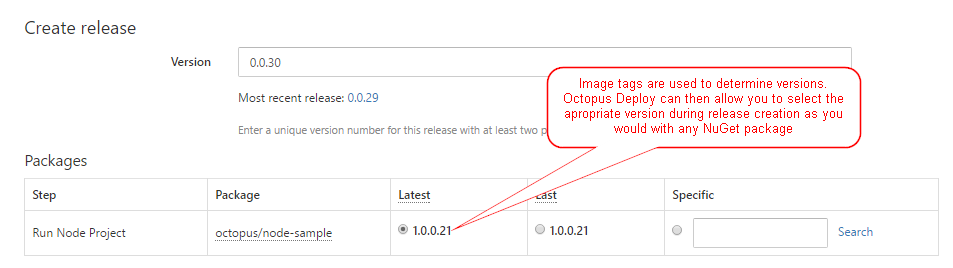

We are planning to use tags as the package versions. Docker allows freeform text in tags, so this would restrict the tags you can use to valid SemVer 2 version numbers. However, as this versioning scheme is already used for NuGet packages, we don't see this as a controversial point.
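To make that constraint concrete, here is a minimal sketch that filters a list of image tags down to those that parse as SemVer 2 versions. The pattern is adapted from the regular expression suggested on semver.org; the function names and sample tags are hypothetical, and this is not necessarily how Octopus would perform the check.

```python
import re

# Adapted from the regular expression suggested on semver.org for SemVer 2.0.0.
SEMVER2 = re.compile(
    r"(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)\.(0|[1-9][0-9]*)"
    r"(?:-((?:0|[1-9][0-9]*|[0-9]*[a-zA-Z-][0-9a-zA-Z-]*)"
    r"(?:\.(?:0|[1-9][0-9]*|[0-9]*[a-zA-Z-][0-9a-zA-Z-]*))*))?"
    r"(?:\+([0-9a-zA-Z-]+(?:\.[0-9a-zA-Z-]+)*))?"
)


def is_semver2(tag):
    """Return True if the whole tag is a valid SemVer 2 version."""
    return SEMVER2.fullmatch(tag) is not None


def usable_tags(tags):
    """Keep only the tags that could be treated as package versions."""
    return [tag for tag in tags if is_semver2(tag)]


# Hypothetical tags as they might come back from a registry.
print(usable_tags(["latest", "1.0.0", "1.2", "2.1.0-beta.1", "01.0.0"]))
```

Under this rule, freeform tags such as latest, or partial versions such as 1.2, would simply not show up as deployable versions.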

For now, we expect to use existing SSH targets for Linux and a normal Tentacle for Windows Server. With this approach, Octopus will execute the Docker commands on the target machine itself, communicating with the local Docker daemon over Unix sockets or Windows named pipes.

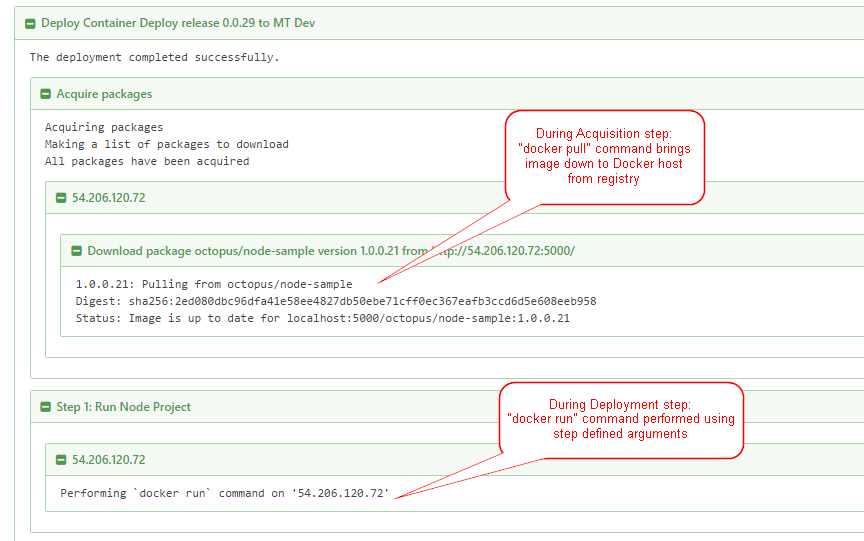

During a deployment, packages will be downloaded via docker pull during the package acquisition step, directly from the registry to the target machine. Docker's built-in delta functionality means that there probably won't be much gain in downloading the image on the Octopus server and shipping the diff to the Tentacle.
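A rough sketch of what the acquisition step might run on the target is shown below. It only builds the command line, using the Docker CLI's -H option and the daemon's default local endpoints (a Unix socket on Linux, a named pipe on Windows). The function names, registry host and image name are hypothetical.

```python
import platform


def default_docker_endpoint(system=None):
    """Return the Docker daemon's default local endpoint for the given OS."""
    system = system or platform.system()
    if system == "Windows":
        return "npipe:////./pipe/docker_engine"  # Windows named pipe
    return "unix:///var/run/docker.sock"  # Unix socket


def pull_command(registry, repository, tag, system=None):
    """Build the argv for pulling one image version from a registry."""
    image = f"{repository}:{tag}"
    if registry:
        # An empty registry means the Docker CLI's default registry is used.
        image = f"{registry}/{image}"
    return ["docker", "-H", default_docker_endpoint(system), "pull", image]


# Hypothetical registry and image; the tag doubles as the package version.
print(pull_command("registry.example.com", "acme/web", "1.4.2", system="Linux"))
```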

Initially, we see two new step types being added: one to run a container, and one to stop a container. The Docker Run step will allow you to specify an image from the registry, which environment variables to pass in, and which ports to map (or to use ephemeral ports). The Docker Stop step will allow you to stop a running container and (optionally) remove it.

For now, you will need to explicitly define which environment variables you want to pass in, though we are considering passing all available variables in as environment variables.
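To illustrate what these two steps boil down to, the sketch below builds the equivalent Docker CLI invocations from the step settings. It only constructs the argument lists; the function names, parameters and sample values are hypothetical, not the actual step implementation.

```python
def docker_run_command(image, env=None, ports=None, publish_all=False):
    """Build the argv for starting a container in detached mode.

    env:         mapping of variable name -> value, passed as -e NAME=VALUE
    ports:       mapping of host port -> container port, passed as -p HOST:CONTAINER
    publish_all: pass -P so Docker maps exposed ports to ephemeral host ports
    """
    cmd = ["docker", "run", "-d"]
    for name, value in (env or {}).items():
        cmd += ["-e", f"{name}={value}"]
    if publish_all:
        cmd.append("-P")
    for host_port, container_port in (ports or {}).items():
        cmd += ["-p", f"{host_port}:{container_port}"]
    cmd.append(image)
    return cmd


def docker_stop_commands(container, remove=False):
    """Build the argv list(s) for stopping, and optionally removing, a container."""
    commands = [["docker", "stop", container]]
    if remove:
        commands.append(["docker", "rm", container])
    return commands


print(docker_run_command("acme/web:1.4.2", env={"ApiUrl": "https://api.example.com"}, ports={8080: 80}))
print(docker_stop_commands("web", remove=True))
```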

The Docker Run step exposes output variables for the container id, the port (if you are using ephemeral ports) and the output of docker inspect, which can be parsed in later steps.
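As an illustration of how those output variables could be derived, this sketch parses the JSON that docker inspect prints for a container and pulls out the id and any published host ports. The variable names (ContainerId, Ports, Inspect) and the sample document are hypothetical and heavily trimmed; real inspect output contains many more fields.

```python
import json

# Trimmed, hypothetical example of `docker inspect <container>` output.
SAMPLE_INSPECT = """
[{"Id": "0123abcd",
  "NetworkSettings": {"Ports": {
      "80/tcp": [{"HostIp": "0.0.0.0", "HostPort": "32768"}],
      "443/tcp": null}}}]
"""


def output_variables(inspect_json):
    """Extract the container id and published host ports from inspect output."""
    container = json.loads(inspect_json)[0]  # inspect prints a JSON array
    ports = {}
    port_map = container["NetworkSettings"]["Ports"] or {}
    for container_port, bindings in port_map.items():
        if bindings:  # null when a port is exposed but not published
            ports[container_port] = int(bindings[0]["HostPort"])
    return {"ContainerId": container["Id"], "Ports": ports, "Inspect": container}


variables = output_variables(SAMPLE_INSPECT)
print(variables["ContainerId"], variables["Ports"])
```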

Open Questions

We still have open questions about how to handle sensitive variables. Passing them in as environment variables is a security concern, as it leaves them exposed in various places. Unfortunately, how best to pass secrets into a container is still considered an open question by the Docker community.

Output variables also need a bit of polish. Which variables are important to you? Separately, we have pondered whether we should support variables as objects, so maybe exposing the entire docker inspect result as a PowerShell object would be useful?
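One concrete example of that exposure: environment variables passed to a container are recorded in its configuration, so they appear in the Config.Env list of docker inspect output. The sketch below shows how easily a sensitive value could be read back that way. The sample document, variable names and helper are hypothetical.

```python
import json

# Trimmed, hypothetical `docker inspect` output for a container that was
# started with a password passed as an environment variable.
SAMPLE_WITH_SECRET = """
[{"Config": {"Env": ["DatabasePassword=s3cret", "PATH=/usr/bin"]}}]
"""


def exposed_secrets(inspect_json, sensitive_names):
    """Return the sensitive variables readable from a container's Config.Env."""
    container = json.loads(inspect_json)[0]
    exposed = {}
    for entry in container["Config"]["Env"] or []:
        name, _, value = entry.partition("=")  # entries look like NAME=VALUE
        if name in sensitive_names:
            exposed[name] = value
    return exposed


print(exposed_secrets(SAMPLE_WITH_SECRET, {"DatabasePassword"}))
```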

Docker supports the idea of container health checks to know when your application is up and running. Should Octopus integrate with this? What would be useful behaviour here?
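One possible behaviour, sketched below, is for a deployment step to poll the container's health status and only continue once it reports healthy. Docker exposes this under State.Health.Status in docker inspect output, with the values starting, healthy and unhealthy. The function, its parameters and the timeout policy are assumptions for discussion, not a committed design.

```python
import json
import time


def wait_until_healthy(inspect, timeout=60.0, interval=2.0,
                       sleep=time.sleep, clock=time.monotonic):
    """Poll a container's health until it is healthy or we give up.

    inspect: callable returning the JSON text of `docker inspect <container>`.
    Returns True once the status is "healthy". Returns False if the status is
    "unhealthy", if the container has no health check, or on timeout.
    """
    deadline = clock() + timeout
    while True:
        state = json.loads(inspect())[0]["State"]
        status = state.get("Health", {}).get("Status")
        if status == "healthy":
            return True
        if status != "starting" or clock() >= deadline:
            return False
        sleep(interval)


# Simulated inspect results so the sketch runs without a Docker daemon.
responses = iter([
    '[{"State": {"Health": {"Status": "starting"}}}]',
    '[{"State": {"Health": {"Status": "healthy"}}}]',
])
print(wait_until_healthy(lambda: next(responses), sleep=lambda seconds: None))
```

Whether a container without a health check should count as a failure, as it does here, or be skipped is exactly the kind of behaviour we would like feedback on.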

As mentioned earlier, we know that a lot of applications deployed with Octopus are .NET applications, and rely on configuration in web.config or app.config, and therefore need modifications between environments. Most of the time, this is done using configuration transforms. However, this goes against the "containers should be immutable" principle. While we do recommend sticking to this principle, we are debating whether to support configuration transforms on container start. This has the potential downside of causing an app recycle during application load. This is one of the biggest areas where we'd like your feedback.
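To make the idea concrete, here is a deliberately simplified stand-in for what could run as a container starts: it rewrites appSettings values in a config file from a set of variables. Note that this is plain key matching rather than a real XDT configuration transform, and the function name, keys and values are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical web.config baked into the image with development defaults.
SAMPLE_CONFIG = """
<configuration>
  <appSettings>
    <add key="ApiUrl" value="http://localhost" />
    <add key="Mode" value="dev" />
  </appSettings>
</configuration>
"""


def apply_app_settings(config_xml, variables):
    """Return the config XML with appSettings values replaced where a variable matches the key."""
    root = ET.fromstring(config_xml)
    for setting in root.findall("./appSettings/add"):
        key = setting.get("key")
        if key in variables:
            setting.set("value", variables[key])
    return ET.tostring(root, encoding="unicode")


# Values that would be scoped to the target environment.
print(apply_app_settings(SAMPLE_CONFIG, {"ApiUrl": "https://api.example.com"}))
```

Because the file changes after the image is built, two containers started from the same image can differ, which is precisely the tension with the immutability principle.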

Our initial implementation aims to use SSH and traditional Tentacles to interact locally with the Docker daemon. Potentially, this could be transformed into a Docker target that uses the HTTPS API instead. This would give us a bunch of benefits, such as allowing us to have health checks, removing the need for a Tentacle on the Docker host, and making it easier to support cloud container hosts such as Azure Container Service (ACS) and Amazon's Elastic Container Service (ECS). On the downside, it would mean you'd have to expose the Docker daemon over HTTPS and configure certificates on both sides. It might be that to support ACS and ECS, we need to do this anyway.
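For a sense of what talking to the daemon over HTTPS involves, the sketch below inspects a container through the Docker Engine API using mutual TLS. It assumes the daemon is listening on the conventional TLS port 2376 and that CA, client certificate and key files have already been issued; the host name, file paths and function names are hypothetical.

```python
import http.client
import json
import ssl


def inspect_path(container_id):
    """Docker Engine API path for inspecting a single container."""
    return f"/containers/{container_id}/json"


def inspect_over_https(host, container_id, ca_file, cert_file, key_file, port=2376):
    """Fetch a container's inspect document from a TLS-protected Docker daemon."""
    context = ssl.create_default_context(cafile=ca_file)  # verify the daemon's certificate
    context.load_cert_chain(certfile=cert_file, keyfile=key_file)  # present our client certificate
    connection = http.client.HTTPSConnection(host, port, context=context, timeout=10)
    try:
        connection.request("GET", inspect_path(container_id))
        response = connection.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned HTTP {response.status}")
        return json.loads(body)
    finally:
        connection.close()


# Example call (needs a reachable daemon and real certificate files):
# info = inspect_over_https("docker-host.example.com", "web",
#                           "ca.pem", "cert.pem", "key.pem")
```

The certificate handling in this sketch is the "configure certificates on both sides" cost mentioned above: the daemon needs a server certificate, and every caller needs a client certificate the daemon trusts.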

What about that big elephant in the room?

We are well aware of many advanced container orchestration tools such as Docker Swarm and Kubernetes. It would be crazy to try and duplicate the functionality of these tools and this is not our intention. Instead, we think Octopus will work best to enable people to get up and running quickly with Docker deployments. Integration with these advanced tools could subsequently be achieved with Step Templates. But, as we are not experts here, please feel free to suggest ways that we could integrate well.

Leave a comment

Does our approach meet with what you would like to see? What parts sound great and what parts need more attention? What are we missing? Let us know in the comments below.

We'd especially love to hear from you if you're already using Docker (doubly so if you're already using it with Octopus) as we want to make sure that we build the most useful thing we can.